We are living in an age where it is impossible to avoid the questions of what A.I. can do and, more rarely, what it cannot do. Machine learning approaches are increasing the speed and scale of the phenomena that can be studied, and it is almost certain that A.I. will affect a broad range of scientific research in profound ways, including identifying new cell classes, finding drug candidates in health care, detecting exoplanets in astronomy, and designing materials with desired properties. It is hard to overestimate the value of such help. A.I. could do in days what might have taken multiple teams years.

The pace is unnerving. Maybe it is time to step back a bit and try to grasp the big picture and understand how we got here. Or, if you see yourself as a user, perhaps it is time to understand A.I.’s limitations.

Here I explore the evolution of science and technology, and how we got to where we are today. Most of these points, up to the arrival of A.I., are covered in my book The Nexus; the accompanying figure condenses them. The objective of this breezy exposition is to generate questions that should be explored in a deeper way.

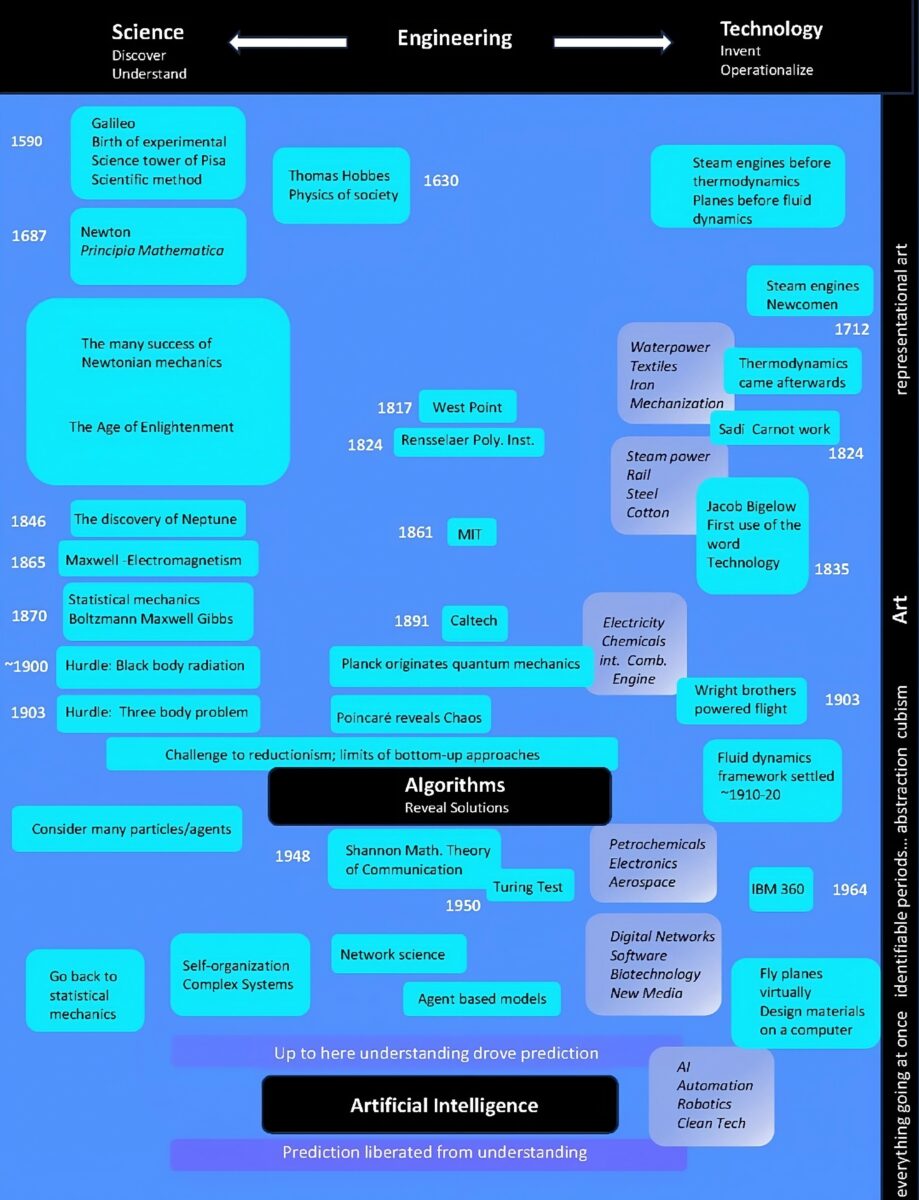

The parallel evolutions of science and technology. Science starts at the top left with Galileo, and technology at the top right with steam engines. All the points in the figure are covered in The Nexus. The key point is the almost sudden transition from a world where prediction is driven by understanding to the new A.I.-driven reality where prediction becomes independent of understanding.

Our imperfect understanding

Let’s start at the beginning. We think we know what science and technology are and how they work, since we are surrounded by them. But our understanding may not withstand scrutiny. A lot of technology, especially software, is hidden from view, and people are not aware of what happens underneath. We may believe that we know what engineering is or what it does, but I would argue that most of us don’t. This limited understanding becomes a problem when making value judgments.

Beyond that, things get even fuzzier with chaos, complexity and complex systems science, network science and, in the last few years, data science (the suffix “science” inevitably added to bestow credibility on new areas). Things get fuzzier still with A.I., which contains machine learning, which in turn contains deep learning. More terms continue to appear, such as generative A.I. and large language models, and more will surely follow. I would further argue that even the concept of modeling is unclear, and that comments about science such as, “It is just a theory,” show that we do not understand what a scientific theory actually is.

Today’s available machinery would have been unthinkable just a decade ago, and big questions await us. But the machinery is getting ahead of us. It is increasingly difficult to predict what A.I. can and cannot do, and to explain how certain results were achieved. Regardless of our fields, all of us need context and an awareness of the big picture. We should be involved in understanding how things work and in the link between prediction and understanding.

Seeing the evolution of science and technology

It is educational to see how the combination of science, engineering, algorithms, and technology got us to where we are today. It is a tall order to explain all of this in one poster. But limitations notwithstanding, I have attempted it, placing the big components — even art — in parallel timelines.

Let’s start with the widespread belief that science has been and is at the root of all technological progress, that science drives technology. Sometimes, this has been true. But often, and especially in the past, this was not the case. We have used technology since the Bronze and Iron Ages with only trial and error to guide us. We were happily making steam engines and driving the Industrial Revolution for well over 100 years before the scientific bases of thermodynamics were put in place. We had powered flight before we understood how an airfoil works, that is, before we understood lift and fluid dynamics.

Compared with technology, which has been with us for several millennia, science itself emerged in the blink of an eye, in historical terms, in the last 500 years or so. Galileo’s experiments can be credited with the birth of the scientific method, and Isaac Newton with the power of math and theory to give us an understanding of seemingly everything on Earth and beyond. We could explain tides and how planets move. From the basic equations of fluid dynamics, we could predict weather increasingly accurately. The Newtonian picture looked complete until James Clerk Maxwell gave us electromagnetism and Einstein gave us relativity.

Out of hurdles, advances

The resolution of issues encountered by Newtonian mechanics laid the foundation for two powerful ideas. First, trying to resolve the problem of black body radiation, seen at the time as a minor hurdle for Newtonian mechanics, instead generated the radical idea that energy was not continuous, proposed by Max Planck. Out of that came the building of quantum mechanics, and with it came nuclear power and microelectronics.

The second idea was that if we understand building blocks, we could understand ensembles of those building blocks. It seemed at first that if we understood the laws governing building blocks, then put those blocks together, voilà, the properties and behavior of the whole would emerge. So powerful was this idea that many wondered if it was possible to find laws governing human behavior and how humans organize themselves.

It is hard to overestimate the faith that we once had in Newtonian mechanics. We found a new planet, Neptune, with pencil-and-paper calculations, because we could not believe that Newtonian predictions could have been wrong. But oddly enough, out of that success came the finding of an inherent limitation. We could not predict the motion of more than two bodies (Earth, Sun, and Moon, for example) before something that had been hidden in plain view limited our ability to make long-term predictions: chaos, the magnification of errors in initial conditions. After that came the question of what happens when we have multiple bodies. If we cannot handle three bodies, how could we handle thousands of them? Here statistical mechanics came in handy. But other insights appeared from things that have always been in front of us. Many have been mesmerized by flocks of birds or schools of fish that move together almost like a single organism. It took a while, however, to discover that there was no commanding bird or fish dictating the moves of the flock or school. The flock or school exhibits properties that cannot be deduced from the individual birds or fish.
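The magnification of errors in initial conditions can be seen in a few lines of code. The sketch below does not simulate three gravitating bodies. It swaps in the logistic map, a much simpler textbook system that behaves chaotically when its parameter r equals 4. The function names, the starting value 0.2, and the initial error of one part in ten billion are all illustrative assumptions.

```python
def logistic_trajectory(x0, r=4.0, steps=60):
    """Iterate x -> r * x * (1 - x) and return every value visited."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def divergence(x0=0.2, eps=1e-10, steps=60):
    """Gap, step by step, between two runs whose starting points differ by eps."""
    a = logistic_trajectory(x0, steps=steps)
    b = logistic_trajectory(x0 + eps, steps=steps)
    return [abs(p - q) for p, q in zip(a, b)]


gaps = divergence()
for n in (0, 10, 20, 30, 40, 50, 60):
    # The gap starts tiny and grows until it is as large as the values themselves.
    print(f"step {n:2d}: gap = {gaps[n]:.3e}")
```

Both runs follow exactly the same rule. Only the tenth decimal place of the starting value differs, yet within a few dozen steps the two trajectories have nothing to do with each other. A perfect law does not guarantee a long-term forecast.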

And out of this came the realization that it is important to analyze systems as a whole. You could not understand such a system just by understanding its building blocks. Examining a system with a bottom-up, reductionist approach, so useful in many contexts, had limits. This brought us to the study of complex systems. Complexity deals with wholes. Rather than breaking problems into parts, studying each of them separately, and then putting them together, you must analyze the system as a complete whole. The defining concept of complex systems is emergence: collective behaviors that appear when collections of things (molecules, cells, fish, people) act as one. Examples include molecules forming living cells, groups of cells controlling heartbeats, fish forming schools, people driving bull markets, and viral behavior arising in social media.

The unreasonable effectiveness of mathematics

Thankfully, algorithms and computer modeling have given us the tools to understand complex systems. Agent-based modeling (ABM), the discipline focusing on the study of systems where many independent entities (agents, in ABM parlance) simultaneously interact with each other, shows us the emergent patterns of behavior for the system.

Still, we needed to understand the pieces of the system to start. These models predict properties based on the understanding of the properties of the individual pieces. What comes out may surprise us, but we know what we put into the behavior of the parts.
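As a minimal sketch of the agent-based idea, consider a toy flock in which each agent follows one local rule: turn toward the average heading of itself and a few agents it happens to meet. This is a simplified, noise-free variant of alignment models used in the flocking literature, not any specific published model, and every name and parameter below (60 agents, 3 encounters per step, 200 steps) is an assumption chosen for illustration.

```python
import math
import random


def polarization(headings):
    """Length of the mean heading vector: near 0 for disorder, 1 for full alignment."""
    n = len(headings)
    cx = sum(math.cos(h) for h in headings) / n
    cy = sum(math.sin(h) for h in headings) / n
    return math.hypot(cx, cy)


def step(headings, rng, k=3):
    """Each agent adopts the mean direction of itself and k randomly met agents."""
    n = len(headings)
    new_headings = []
    for i in range(n):
        # Random encounters; an agent may occasionally "meet" itself, which is harmless.
        group = [i] + [rng.randrange(n) for _ in range(k)]
        sx = sum(math.cos(headings[j]) for j in group)
        sy = sum(math.sin(headings[j]) for j in group)
        new_headings.append(math.atan2(sy, sx))
    return new_headings


def simulate(n_agents=60, n_steps=200, seed=0):
    """Start from random headings and record the polarization after every step."""
    rng = random.Random(seed)
    headings = [rng.uniform(-math.pi, math.pi) for _ in range(n_agents)]
    history = [polarization(headings)]
    for _ in range(n_steps):
        headings = step(headings, rng)
        history.append(polarization(headings))
    return history


history = simulate()
print(f"polarization at start: {history[0]:.2f}, at end: {history[-1]:.2f}")
```

No agent is in charge and no line of the code says "form a flock," yet the group ends up moving in one direction. The collective outcome may be surprising, but every rule that produced it is written down and can be inspected.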

This is where the combination of science, math, and algorithms has taken us. So remarkable has been this state of knowledge that in 1960 the physicist Eugene Wigner, later a Nobel laureate, wrote a paper with the title “The Unreasonable Effectiveness of Mathematics in the Natural Sciences,” making the point that mathematical theories often have predictive power in describing nature.

Prediction, up until this point, required some sort of understanding. But now, for the first time, we can predict without understanding, especially since deep learning entered the A.I. picture.

The figure, even though not to scale in time, makes clear how sudden this transition has been.

Let us define what we mean by understanding, in a rather practical way, since this is a question that may get us into deep philosophical territory. Let us start by asserting that not all science-based predictions can be understood by everybody. No one in science would question the basic equations that govern the motions of masses of air and the many factors that go into modeling weather. In fact, it would be hard to question the effectiveness of these models and our ability to predict forms of inclement weather days in advance.

But things get complicated when we try to predict weather on longer time scales, when weather turns into climate. No model is free of assumptions, and disagreements will appear in the assumptions made to build the model. The models can be enormously complex, factoring in the behavior of aerosols, snow, rain, clouds, and the interaction between land, atmosphere, and oceans — effectively calculating all these interactions at once.

Only experts can understand some and, rarely, all of the basic components that go into the large models. But we could in principle dig deeper, “see” which components make up the simulation and which assumptions were made, and question them. We can run the model with some of the components removed, or change assumptions, and explore what happens.
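A toy example shows what this kind of inspection looks like. The sketch below is a zero-dimensional energy balance model, a standard textbook simplification that is vastly cruder than any real climate model. The solar constant, the albedo of 0.30, and the effective emissivity of 0.612 are approximate values assumed here for illustration, and the function name is hypothetical.

```python
SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
SOLAR_CONSTANT = 1361.0  # sunlight arriving at Earth, W m^-2 (approximate)


def equilibrium_temperature(albedo=0.30, emissivity=0.612):
    """Surface temperature (K) at which absorbed sunlight equals emitted heat.

    albedo:     fraction of sunlight reflected back to space (assumed value).
    emissivity: effective emissivity, a crude stand-in for the greenhouse
                effect (assumed value; 1.0 means no greenhouse effect).
    """
    absorbed = SOLAR_CONSTANT * (1.0 - albedo) / 4.0
    return (absorbed / (emissivity * SIGMA)) ** 0.25


baseline = equilibrium_temperature()                    # roughly 288 K
no_greenhouse = equilibrium_temperature(emissivity=1.0)  # roughly 255 K
no_albedo = equilibrium_temperature(albedo=0.0)          # warmer than baseline

print(f"baseline:          {baseline:.1f} K")
print(f"without greenhouse: {no_greenhouse:.1f} K")
print(f"without reflection: {no_albedo:.1f} K")
```

Each assumption is a named parameter, so switching one off and rerunning the model shows exactly how much of the answer depends on it. One can disagree with the value 0.612, but one can see it, change it, and argue about it.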

But as they are designed now, A.I. models do not allow such clean inspection.

The black box of A.I.

A.I.’s original goal, to understand how humans think, began as a top-down enterprise in which symbols were used to denote concepts as designated by humans. Researchers designed complex representations from these symbols, along with a range of mechanisms to reason about the ideas represented. But in parallel, aided by increasingly powerful computers, a very different approach was taking hold: machine learning, a form of statistical learning that does not rely on the same kind of symbolic representation. Rather than asserting from the top how concepts are related, associations of features are learned bottom up. Data is fed into algorithms, which then detect patterns within it and use those patterns to make predictions.
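The bottom-up approach can be illustrated with one of the simplest statistical learners, nearest-neighbor averaging. It is used here only as a stand-in: deep learning works very differently in its details. The data come from the free-fall law d = ½gt², but the predictor is never told that law. The function name, the sampling of times from 0 to 3 seconds, and the choice of two neighbors are assumptions made for this sketch.

```python
G = 9.81  # gravitational acceleration, m/s^2


def knn_predict(train, x, k=2):
    """Predict y at x by averaging the y values of the k closest training points."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - x))[:k]
    return sum(y for _, y in nearest) / k


# Training data: (time, distance fallen) pairs for t = 0.0, 0.1, ..., 3.0 seconds.
# The law that generated them is hidden from the predictor.
train = [(t / 10, 0.5 * G * (t / 10) ** 2) for t in range(31)]

inside = knn_predict(train, 1.25)         # a time within the range of the data
true_inside = 0.5 * G * 1.25 ** 2

outside = knn_predict(train, 10.0)        # a time far beyond the data
true_outside = 0.5 * G * 10.0 ** 2

print(f"t = 1.25 s: predicted {inside:.2f} m, actual {true_inside:.2f} m")
print(f"t = 10 s:   predicted {outside:.2f} m, actual {true_outside:.2f} m")
```

Within the range of the data, the prediction is close to the truth even though nothing in the code represents gravity. Far outside that range it fails badly, and nothing in the predictor itself signals that it has left familiar ground.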

The results have been spectacular. Perhaps the most exciting A.I. advance in science has been AlphaFold, which can accurately predict protein structures. Its predictions have been used in research on drugs and vaccines and in understanding disease mechanisms. It is truly amazing what A.I. can do, making Arthur C. Clarke’s Third Law, “Any sufficiently advanced technology is indistinguishable from magic,” ever more real.

So, what is the problem with this? A solution is no better than the assumptions that have gone into it, and A.I. systems are often not built to explain how they came to a certain conclusion. It is hard to check why something emerges as an output in current A.I. machinery.

The possibility of emergence in A.I. systems

It used to be that one could build something like a ship and test the model for nearly all possible operating conditions. That is not possible with modern products. As soon as an iPhone reaches the hand of its owner, it becomes unique. No two iPhones out there are the same. In the same vein, it is impossible to test all combinations of settings in the operation of a modern jetliner. It’s hard to be completely sure that no strange behavior may emerge.

In fact, unexpected behavior may be revealed in large-scale models. For example, we can simulate the interactions between biodiversity, agriculture, climate change, international trade, and policy priorities. We can also simulate how air and land transportation networks affect epidemics and healthcare. These systems represent a higher order of complexity: systems interacting with other systems. These models capture multiple interactions and feedback loops to explore possible unexpected, emergent behaviors. To this end, we can tweak parameters and explore scenarios that may lead to optimal policies. This falls under the discipline of “systems of systems engineering.”

Could large A.I. models display aspects of emergence? (Large A.I. models include billions of parameters: GPT-3 has 175 billion, and Google’s PaLM can be scaled up to 540 billion.) On some tasks, the performance of various models improved smoothly and predictably as size and complexity increased. On other tasks, scaling up the number of parameters did not yield any improvement. But surprisingly, on other seemingly trivial tasks, such as adding three-digit numbers or multiplying two-digit numbers, results have suggested comparisons to biological systems. Larger models seem to gain new abilities spontaneously. These cases seem to be less amenable to inspection.

This leaves us in uncharted territories. A.I. will affect everything.

It used to be that predictions depended on understanding. For the last two centuries or so, that has been the unstated foundation of most advances in technology. But we should recall, as well, that for hundreds of years, we were designing systems that worked well without having the scientific bases to understand their functioning.

But the advent of A.I. represents a seismic shift in enabling prediction without understanding. A.I. can make accurate predictions and achieve remarkable results without necessarily grasping the underlying reasons or mechanisms.

On one hand, this could be a boon. An age of prediction free from understanding. On the other, our lack of understanding could mean we lose control of the outputs altogether.

As A.I. systems become increasingly complex, with billions of parameters, they could exhibit spontaneous emergence of new abilities. This emergence could be good or bad. But in any case, this uncharted territory raises questions and potential concerns about the limitations of our understanding and control over these powerful A.I. models, as the models themselves become increasingly sophisticated and capable but also more impenetrable.

This is an issue we must deal with now. The speed of this change becomes even more dramatic when we put science, engineering, algorithms, and technology in a timeline. Science is a bit over 400 years old; the word technology did not exist until less than 200 years ago. Look at what has happened in the last 150 years, then look at what has happened in the last 15 years. Time scales are now compressed. Change will accelerate. The combination of lack of transparency and possible unwanted emergence represents a significant challenge.

It is difficult to suggest concrete actions, but being aware of the impending tsunami of A.I. incursions into imagined and unimagined spaces may best prepare us for the actions we may need to take.